Introduction

A few months back there was a bit of a kerfuffle about how Twitter uses an algorithm to crop images. If you gave Twitter an image with two faces in it, the algorithm would pick one of the faces to show in the tweet. To test the algorithm, people fed it pairs of images: the first with one face on the left and the other on the right, the second with the two faces swapped (or the same idea, but top and bottom). The Twitter algorithm appeared to be selecting white faces over black/brown faces. Enough news articles were written on the topic that Twitter responded, and they updated the interface.

Well, did they really fix this bias in cropping images? How can you test it? And can I learn some Python in the meantime? Sure!

Process

So how can you “scientifically” study all this? I decided to get a whole bunch of images of faces, stitch them together in all the possible ways, tweet the images (letting the algorithm choose which face it wanted to select), and then go through each image to categorize which face got selected more often.

Human Faces

To make life easier, I grabbed “human” faces from Generated Photos, where they use AI to create pictures of human-looking faces (none of the faces are actual people). This was helpful because the faces weren’t going to be celebrities or faces that Twitter had already seen, and the site has selectors for sex, age, and ethnicity. Yes, this was a bit gross. Gender and sex are different, and this website doesn’t acknowledge that, nor do they have a sliding scale, nor do I really want them to have a sliding scale. Same with ethnicity. Sigh.

Anyway, from those categories, I decided on testing [Female/Male] [Adult/Child] [White/Black/Latino/Asian] for the human faces.
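Those three selectors multiply out to 2 × 2 × 4 = 16 face types. Here is a minimal sketch of enumerating them with `itertools.product`, using the same lowercase labels as the stitching script further down. The `face_types` name is just for illustration and isn't part of the original scripts.

```python
from itertools import product

ethnicity = ["asian", "black", "latino", "white"]
age = ["adult", "child"]
gender = ["male", "female"]

# One label per combination, e.g. "asianadultmale"
face_types = ["".join(combo) for combo in product(ethnicity, age, gender)]

print(len(face_types))  # 16
print(face_types[0])    # asianadultmale
```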

Here are the faces that I used for the Human Faces category:

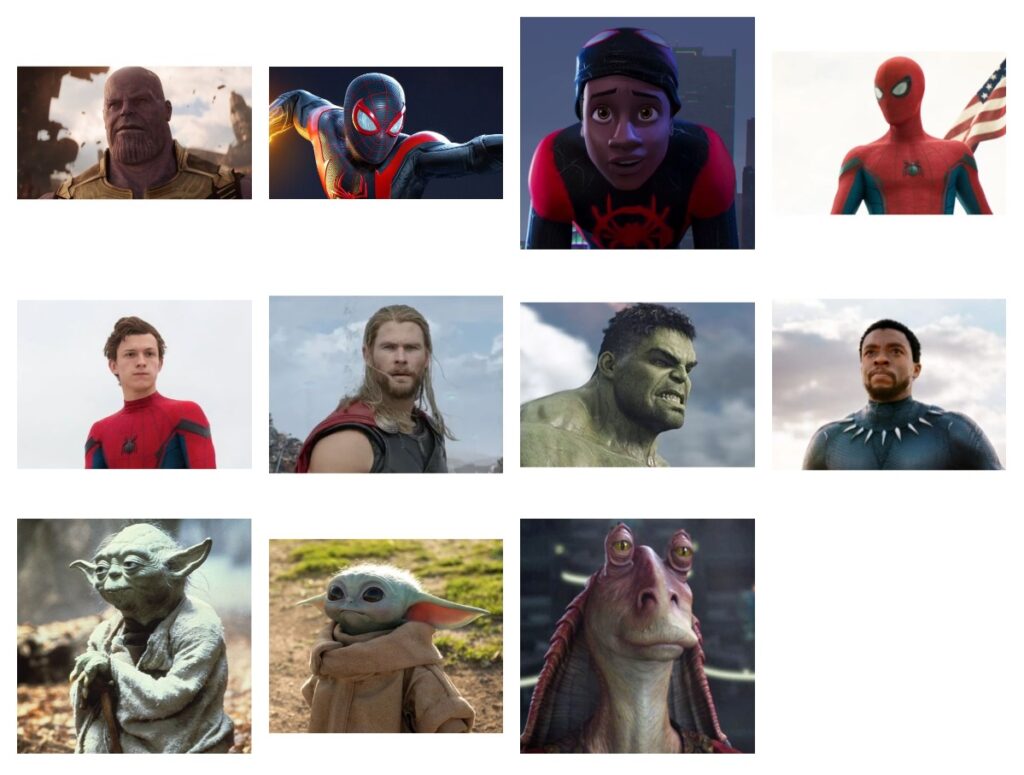

Superheroes

I was doing a lot of work, so why not do a bit more and add in superheroes! Here are the images that were in the superhero category:

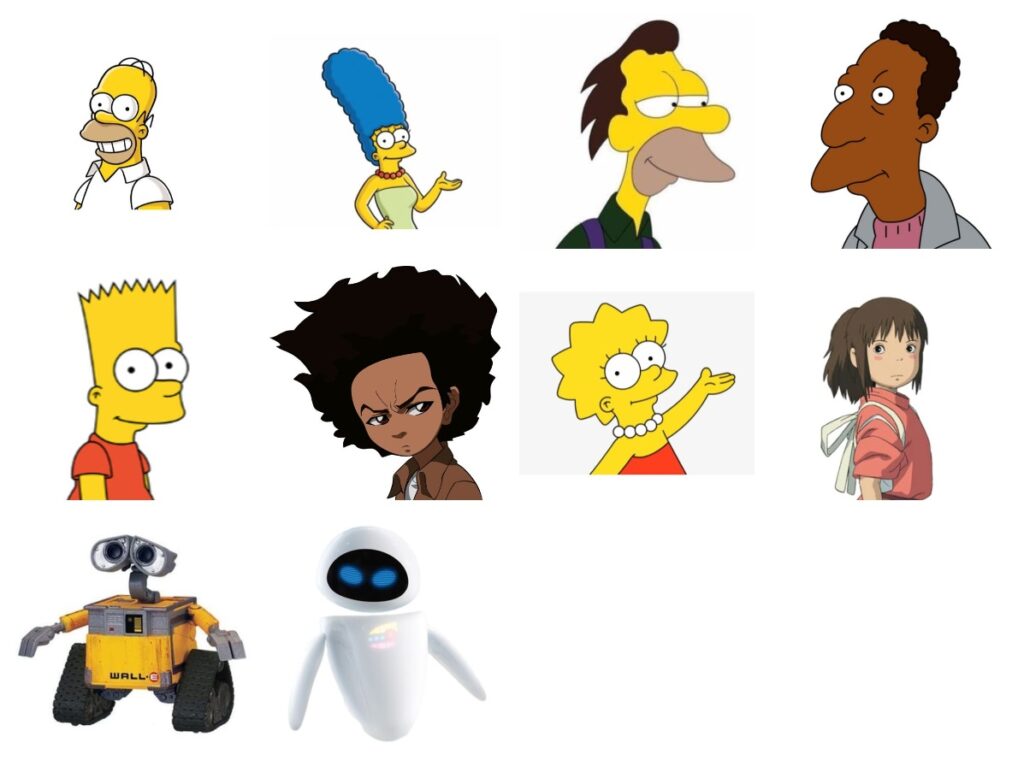

Cartoons

Let’s throw in some cartoon images too. I was inspired by this tweet:

So here are the cartoon faces that were used in this project:

Misc

To Python!

So I had to use Python in two new ways. The first was to make a script to stitch together all the possible images. The second was to tweet out these images.

I made a new Twitter account and, after several emails back and forth, was able to get keys to access the API and have a Python program tweet out images. The Twitter account that I created is: @testbias

Here’s the code for stitching the images together:

    # human images from: https://generated.photos/
    # faces in things from: https://en.wikipedia.org/wiki/Pareidolia
    # superhero images from google image search
    # image loading script: https://stackoverflow.com/questions/30227466/combine-several-images-horizontally-with-python

    from PIL import Image

    imagesFileNames = []

    # load in images of "people" (actually AI created images, not real people)
    ethnicity = ["asian", "black", "latino", "white"]
    age = ["adult", "child"]
    gender = ["male", "female"]
    for e in ethnicity:
        for a in age:
            for g in gender:
                imagesFileNames.append("./sourceImages/" + e + a + g + ".jpg")

    # load in images of supers (super1.jpg .. super11.jpg)
    for i in range(1, 12):
        imagesFileNames.append("./sourceImages/" + "super" + str(i) + ".jpg")

    # load in images of cartoons (cartoon1.jpg .. cartoon10.jpg)
    for i in range(1, 11):
        imagesFileNames.append("./sourceImages/" + "cartoon" + str(i) + ".jpg")

    # load in images of facesinthings (facesinthings1.jpg .. facesinthings3.jpg)
    for i in range(1, 4):
        imagesFileNames.append("./sourceImages/" + "facesinthings" + str(i) + ".jpg")

    # NOTE: the "- 1" bounds mean the last file in the list is never used,
    # leaving 39 images (indices 0-38), which matches range(0, 39) in the
    # tweeting script.
    # NOTE: only the i_j ordering (i on the left, j on the right) is saved here;
    # the tweeting script also reads the swapped j_i files.
    for i in range(0, len(imagesFileNames) - 1):
        for j in range(i + 1, len(imagesFileNames) - 1):
            images = [Image.open(x) for x in [imagesFileNames[i], imagesFileNames[j]]]

            # canvas is as wide as both images together and as tall as the taller one
            widths, heights = zip(*(im.size for im in images))
            total_width = sum(widths)
            max_height = max(heights)
            new_im = Image.new('RGB', (total_width, max_height))

            # paste the images left to right
            x_offset = 0
            for im in images:
                new_im.paste(im, (x_offset, 0))
                x_offset += im.size[0]

            new_im.save("./outputImages/" + str(i) + "_" + str(j) + ".jpg")
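The script above pastes images left to right and writes only the `i_j` ordering, while the test also needs the swapped version (and, optionally, top/bottom). Here is a rough sketch of the layout arithmetic for both directions, plus a way to list every ordered pair. `layout` and `ordered_pairs` are hypothetical helpers, not part of the original script, and the image sizes are made up.

```python
from itertools import permutations


def layout(sizes, direction="horizontal"):
    """Return (canvas_size, offsets) for pasting images side by side or stacked.

    sizes is a list of (width, height) tuples, one per image.
    """
    widths, heights = zip(*sizes)
    offsets = []
    if direction == "horizontal":
        canvas = (sum(widths), max(heights))
        x = 0
        for w in widths:
            offsets.append((x, 0))
            x += w
    elif direction == "vertical":
        canvas = (max(widths), sum(heights))
        y = 0
        for h in heights:
            offsets.append((0, y))
            y += h
    else:
        raise ValueError("direction must be 'horizontal' or 'vertical'")
    return canvas, offsets


def ordered_pairs(n):
    """All (i, j) with i != j, so every pair shows up in both orders."""
    return list(permutations(range(n), 2))


sizes = [(100, 200), (150, 120)]    # made-up image sizes
print(layout(sizes, "horizontal"))  # ((250, 200), [(0, 0), (100, 0)])
print(layout(sizes, "vertical"))    # ((150, 320), [(0, 0), (0, 200)])
print(len(ordered_pairs(39)))       # 1482
```

With Pillow, the returned values would feed `Image.new('RGB', canvas)` and `new_im.paste(im, offset)`, the same two calls the script above already uses.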

And here’s the code for tweeting out all the possible images:

    import tweepy
    import time

    def main():
        twitter_auth_keys = {
            "consumer_key"        : "BLAHBLAHBLAH",
            "consumer_secret"     : "BLAHBLAHBLAH",
            "access_token"        : "BLAHBLAHBLAH",
            "access_token_secret" : "BLAHBLAHBLAH"
        }

        auth = tweepy.OAuthHandler(
            twitter_auth_keys['consumer_key'],
            twitter_auth_keys['consumer_secret']
        )
        auth.set_access_token(
            twitter_auth_keys['access_token'],
            twitter_auth_keys['access_token_secret']
        )
        api = tweepy.API(auth)

        count = 0
        for i in range(0, 39):
            for j in range(i + 1, 39):
                # Load images
                media1 = api.media_upload("./outputImages2/" + str(i) + "_" + str(j) + ".jpg")
                media2 = api.media_upload("./outputImages2/" + str(j) + "_" + str(i) + ".jpg")

                # Post tweet with image
                tweet = str(i) + " vs " + str(j)
                post_result = api.update_status(status=tweet, media_ids=[media1.media_id, media2.media_id])
                print(tweet)
                time.sleep(1)

                # sleep for 15 minutes if at 100 tweets
                count += 1
                if (count == 100):
                    time.sleep(1*60*15)
                    count = 0

    if __name__ == "__main__":
        main()
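For a sense of scale, here is a back-of-the-envelope sketch of how many tweets that double loop sends and how long the built-in pauses take. It only mirrors the script's own numbers (39 images, 1 second per tweet, 15 minutes after every 100 tweets) and ignores upload and network time. `estimate_run` is a hypothetical helper, and `math.comb` needs Python 3.8 or newer.

```python
from math import comb


def estimate_run(n_images, pause_every=100, pause_seconds=15 * 60, per_tweet_seconds=1):
    """Return (tweets, pauses, total_sleep_seconds) for one tweet per unordered pair."""
    tweets = comb(n_images, 2)      # one tweet per pair i < j
    pauses = tweets // pause_every  # the counter resets after each long pause
    total = tweets * per_tweet_seconds + pauses * pause_seconds
    return tweets, pauses, total


tweets, pauses, seconds = estimate_run(39)
print(tweets, pauses, round(seconds / 3600, 2))  # 741 7 1.96
```

So the run sends 741 tweets (each carrying two images) and spends about two hours just sleeping.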

Results!

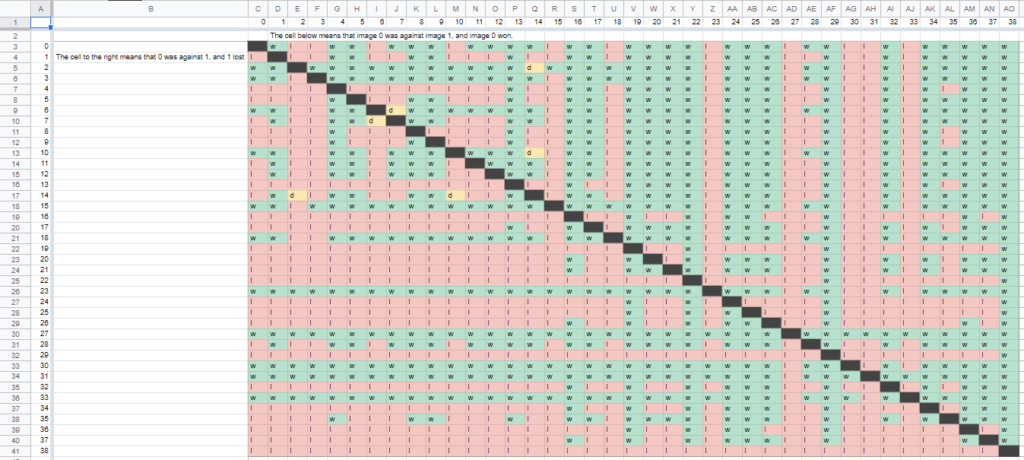

So this all ended up as one big spreadsheet to see who “won” and “lost” (this took a _lot_ of scrolling).

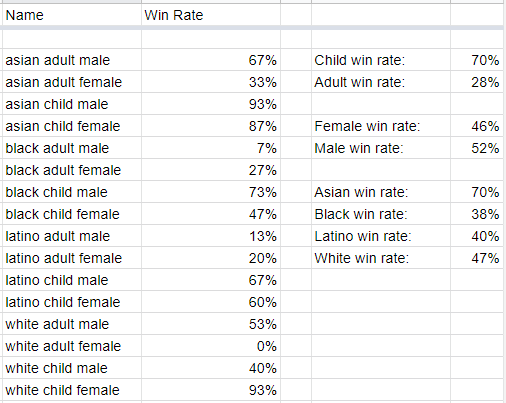
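I did the tallying by hand in a spreadsheet, but the same bookkeeping could be sketched in Python. This assumes each crop decision is recorded as a `(face_a, face_b, winner)` tuple. That record format, the `tally` helper, and the sample rows are all made up for illustration and are not the actual results.

```python
from collections import Counter


def tally(results):
    """Return {name: (wins, losses)} from (face_a, face_b, winner) records."""
    wins, appearances = Counter(), Counter()
    for face_a, face_b, winner in results:
        appearances[face_a] += 1
        appearances[face_b] += 1
        wins[winner] += 1
    return {name: (wins[name], appearances[name] - wins[name]) for name in appearances}


# Made-up sample records, NOT the real data
sample = [("A", "B", "A"), ("A", "C", "A"), ("B", "C", "C")]
print(tally(sample))  # {'A': (2, 0), 'B': (0, 2), 'C': (1, 1)}
```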

So for the first breakdown, I looked at just the human faces:

Takeaways? Hard to tell. Looks like Twitter’s crop algorithm slightly favors “male” faces over “female” faces. It seems to favor children’s faces over adult faces, and Asian faces over the others. But this was one picture of each “type” of face. To do this kind of testing properly, you’d need access to the API (unthrottled), and a whole bunch of other access. Only Twitter has that (for now). I think it’s hard to conclude that there isn’t SOMETHING going on here, but further research is needed.
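One way to put a number on “hard to tell” is an exact binomial test: if the crop were a coin flip, how surprising would a given win count be? Here is a minimal sketch using only the standard library. The `binomial_two_sided_p` helper is hypothetical, and the 38-out-of-64 figure is an invented example (64 being the 8 × 8 male-vs-female matchups among the 16 face types), not a number from my spreadsheet.

```python
from math import comb


def binomial_two_sided_p(wins, n, p=0.5):
    """Exact two-sided p-value: total probability of outcomes
    no more likely than the observed one under a fair pick."""
    pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    observed = pmf[wins]
    # small tolerance so equally likely outcomes are counted despite float rounding
    return min(1.0, sum(x for x in pmf if x <= observed * (1 + 1e-9)))


# Invented example: one group winning 38 of 64 matchups
p_value = binomial_two_sided_p(38, 64)
print(p_value)
```

A large p-value would mean the split is consistent with random picking. With only one picture per face type, even a small p-value would say more about those particular pictures than about the categories they stand in for.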

How “should” they be choosing how to crop an image? Simple! If there are two faces, randomly pick between them. Done. Or always pick the left or top face. Done.
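Both of those rules are tiny to write down. Here is a sketch that assumes some face detector has already produced `(x, y, width, height)` boxes. The box format and both function names are assumptions for illustration, not anything Twitter uses.

```python
import random


def pick_face_random(faces, rng=random):
    """Pick one detected face uniformly at random."""
    return rng.choice(faces)


def pick_face_first(faces):
    """Always pick the leftmost face; ties go to the topmost one."""
    return min(faces, key=lambda box: (box[0], box[1]))


# Made-up boxes as (x, y, width, height)
faces = [(300, 10, 50, 50), (20, 40, 50, 50)]
print(pick_face_first(faces))  # (20, 40, 50, 50)
print(pick_face_random(faces) in faces)  # True
```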

Further Results

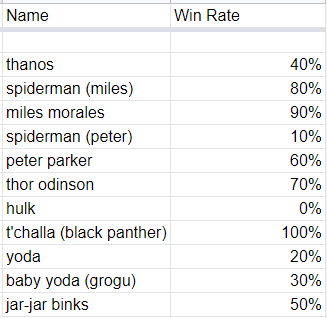

Superheroes. These are probably images that the algorithm would recognize, as they are in popular culture:

T’Challa sweeps it! Hulk has a poor showing. Both Miles Morales and Peter Parker are selected more often when they are showing their faces. Jar-Jar has a surprising number of wins.

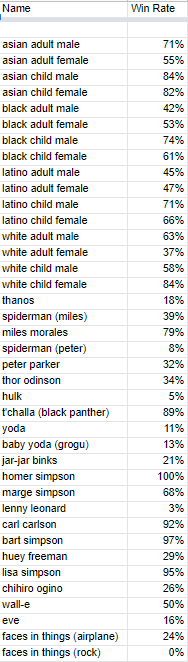

Cartoons. One caveat: I made the Twitter bio pic for @testbias a picture of Homer Simpson, so that might have messed up the results.

So Eve does poorly, as do Chihiro and Huey. Homer sweeps it. But Lenny. Why Lenny! Was it because of the original tweet where Lenny beat Carl? Maybe!

All the Results

Homer takes it. But this might have been my fault since Homer is the Twitter account’s bio pic.

Bart Simpson, Lisa Simpson, Carl Carlson and T’Challa take positions 2-5. Faces in things (rock face), Lenny Leonard, and the Hulk take the bottom three spots. Full spreadsheet of results is found here.

Miscellaneous Interesting Battles

Baby Yoda (Grogu) vs Yoda

Hulk vs Thor

Spider-Man (Miles) vs Spider-Man (Peter)

Thanos vs Thor

Thanos vs Jar-Jar

Hulk vs Yoda (battle of the greens)