This post is part of the Virtual Conference on Mathematical Flavors and belongs to a group thinking about different cultures within mathematics and how those relate to teaching. Our group draws its initial inspiration from writing by mathematicians that describes different camps and cultures: from problem solvers and theorists, musicians and artists, explorers, alchemists and wrestlers, to “makers of patterns.” Is each of these cultures represented in the math curriculum? Do different teachers emphasize different aspects of mathematics? Are all of these ways of thinking about math useful when thinking about teaching, or are some of them harmful? These are the sorts of questions our group is asking.

Anna Blinstein and Michael Pershan asked us to respond to a prompt so we could have a set of posts for this Virtual Conference. The prompt was the essay “The Two Cultures of Mathematics,” by mathematician W. T. Gowers. Gowers breaks down pure mathematicians into essentially two categories based on their beliefs:

- The point of solving problems is to understand mathematics better.

- The point of understanding mathematics is to become better able to solve problems.

Caveat: I didn’t read the essay in much depth, and I’m sure that I’m purposely misinterpreting his conclusions. That’s ok with me, but don’t go rioting outside Gowers’ door because of this silly blog post.

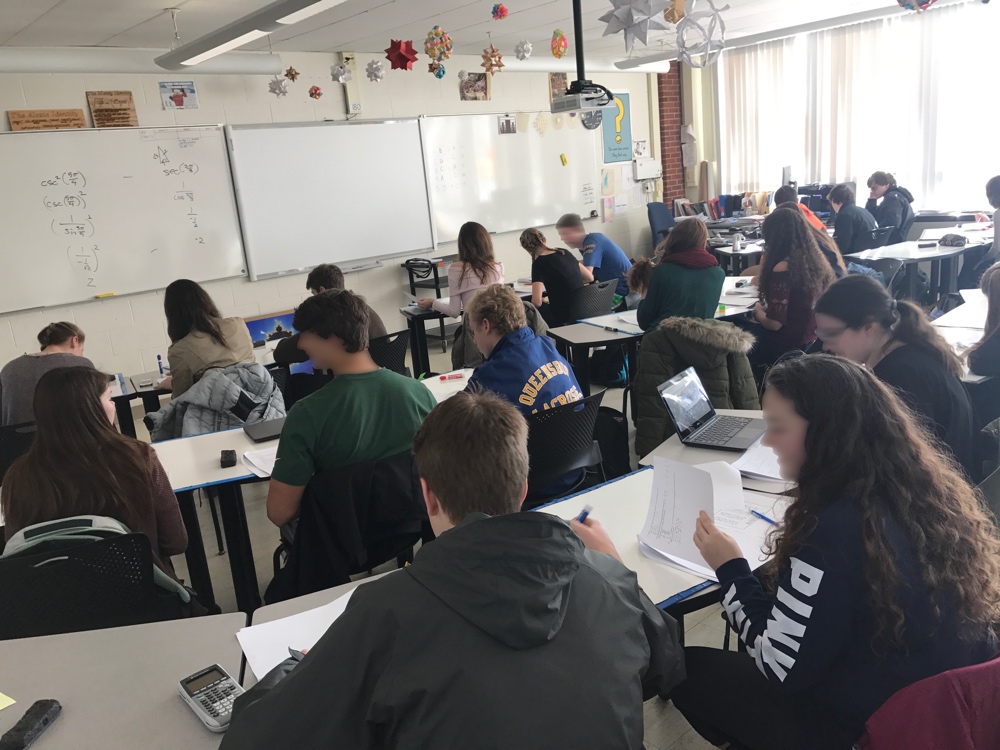

I find it interesting that Gowers defines the culture of math, and hence the culture of mathematicians, through the *solving problems* lens. While it’s not an inclusive definition of what a mathematician is, I’m ok with that; it’s a good place to start. But am I a mathematician by this definition? I don’t solve novel math problems. I’ve only ever solved problems that someone else has already solved (with the exception of something personal, like how long I can make this $20 last at the horse track). Nor do I build theory or structure to help others solve novel problems. I teach teenagers how to use centuries- and sometimes millennia-old math to solve problems that have already been solved.

At best I’m a mathematician once removed: I teach those who might go on to solve novel problems on their own (although, as far as I know, none of the roughly one thousand students I’ve taught have done so). I don’t think I’m one of the shoulders these students will stand on if they become mathematicians, but I am lending a hand to boost them up to a slightly higher position.

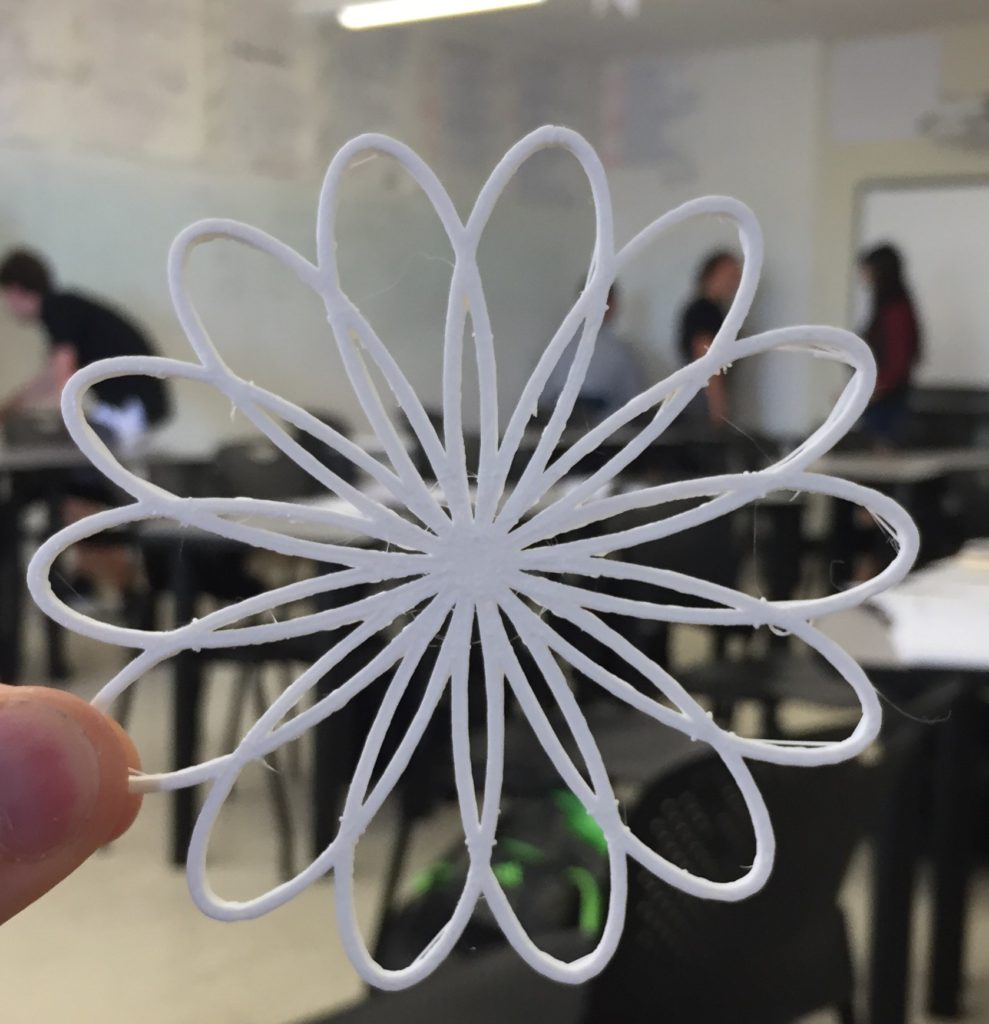

And I’m ok with this definition of a mathematician; it doesn’t bother me much one way or the other. I play with math. I make things with math. I teach math. But I’m certainly not solving new problems or creating structure to solve those problems.

Why am I ok with this? Because when I solve a problem that I’ve never solved before, it’s still new to me. It doesn’t take too much of the enjoyment away from me to know that millions of people have already solved this problem. Likewise, I don’t find it less enjoyable to hike a mountain that someone has already conquered: it’s still a new view for me to take in, a challenge for my body to take on, and so on.

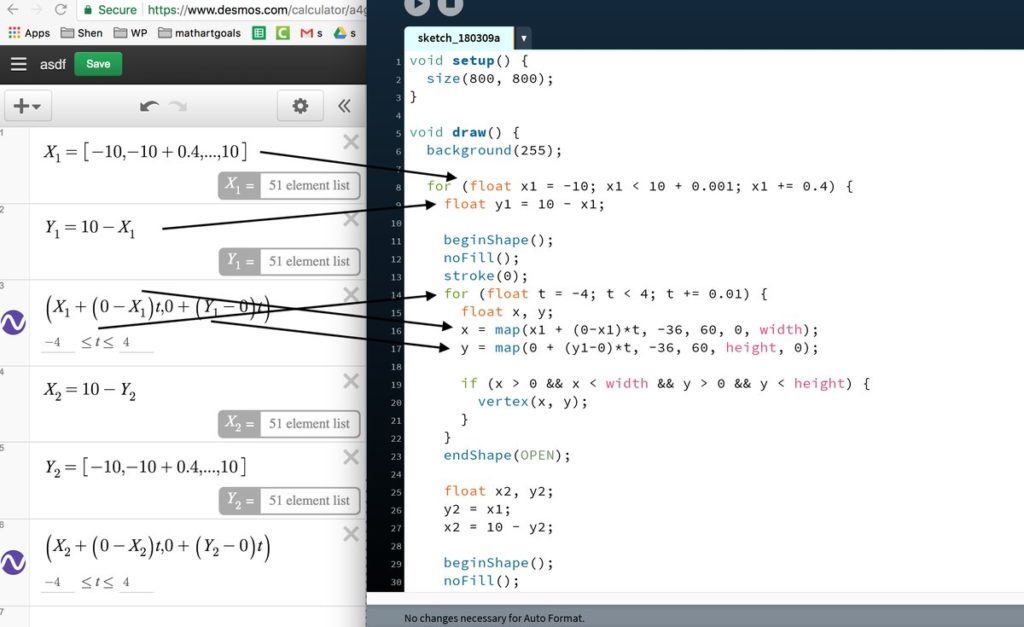

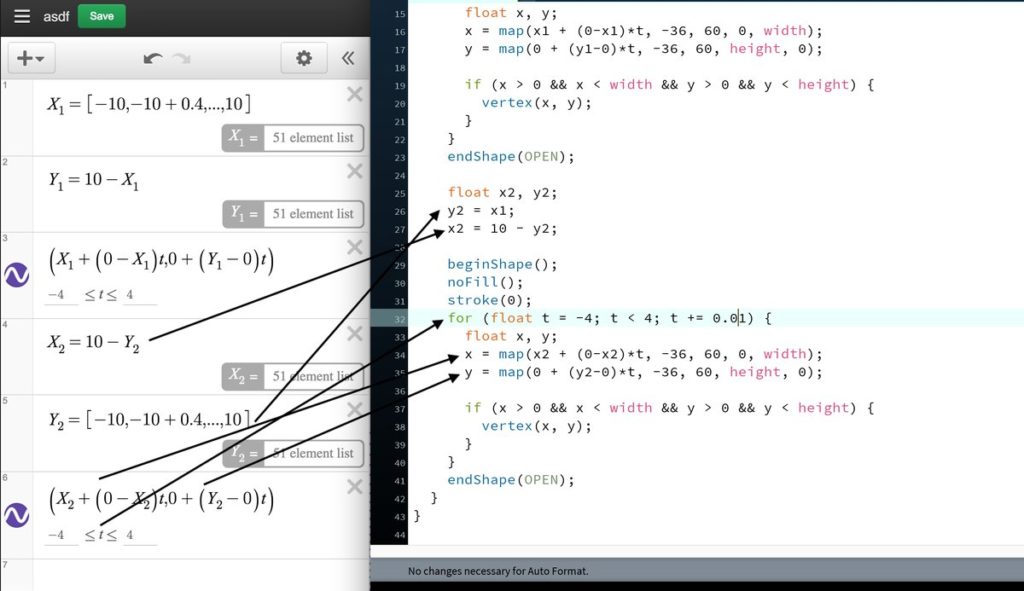

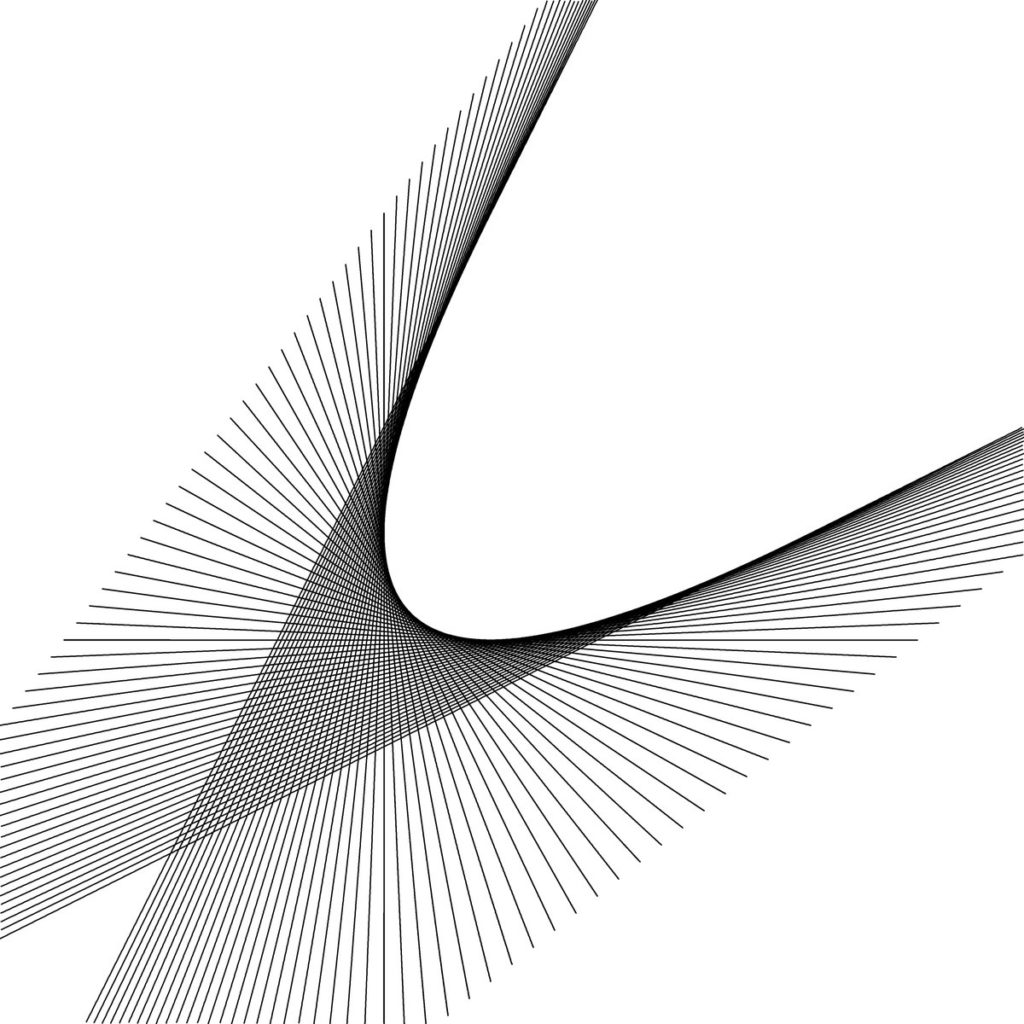

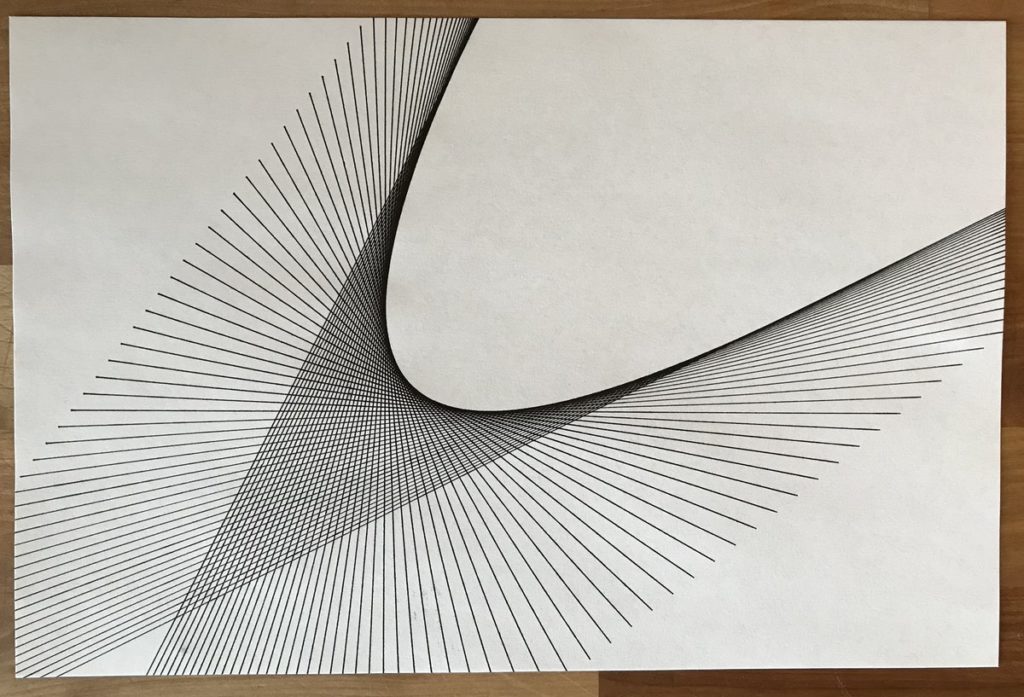

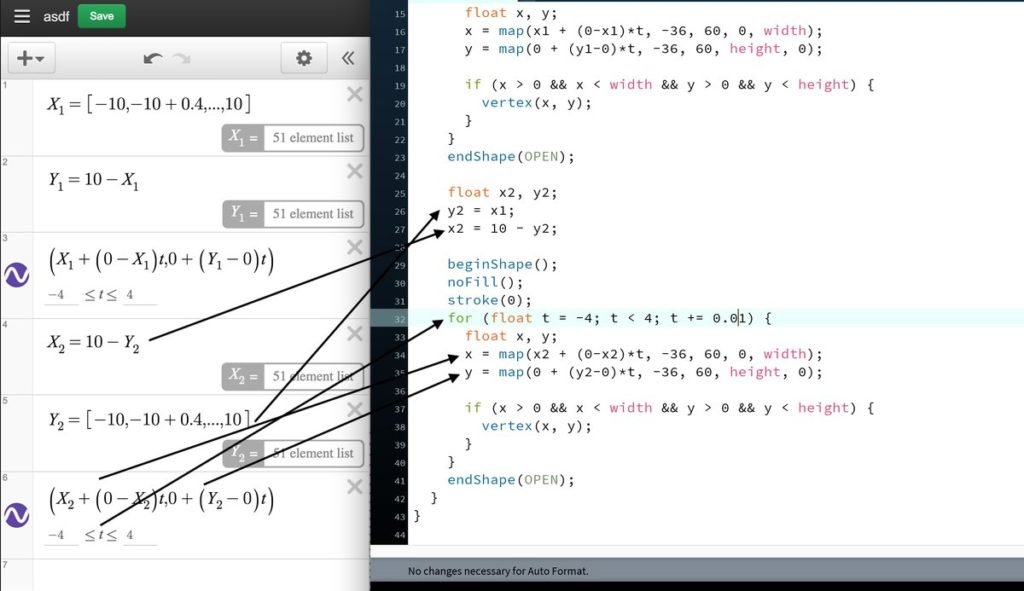

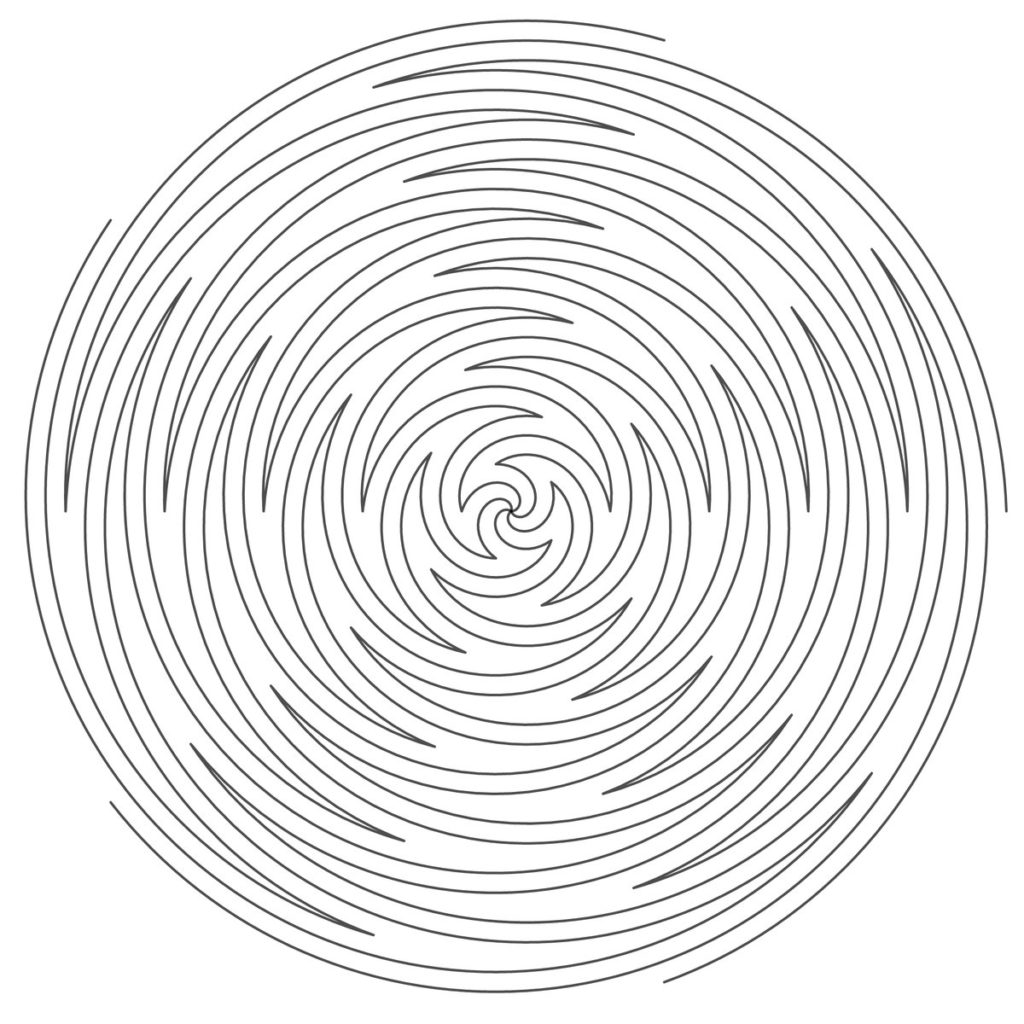

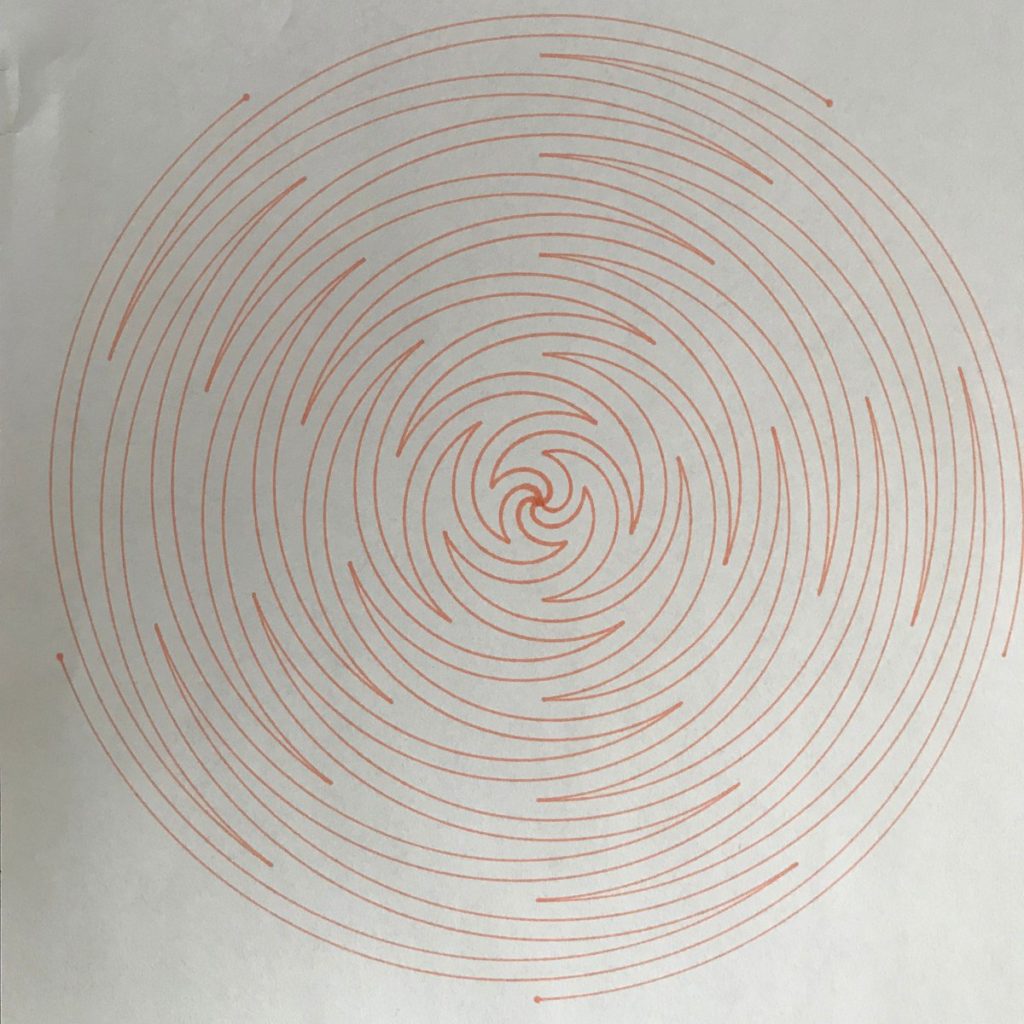

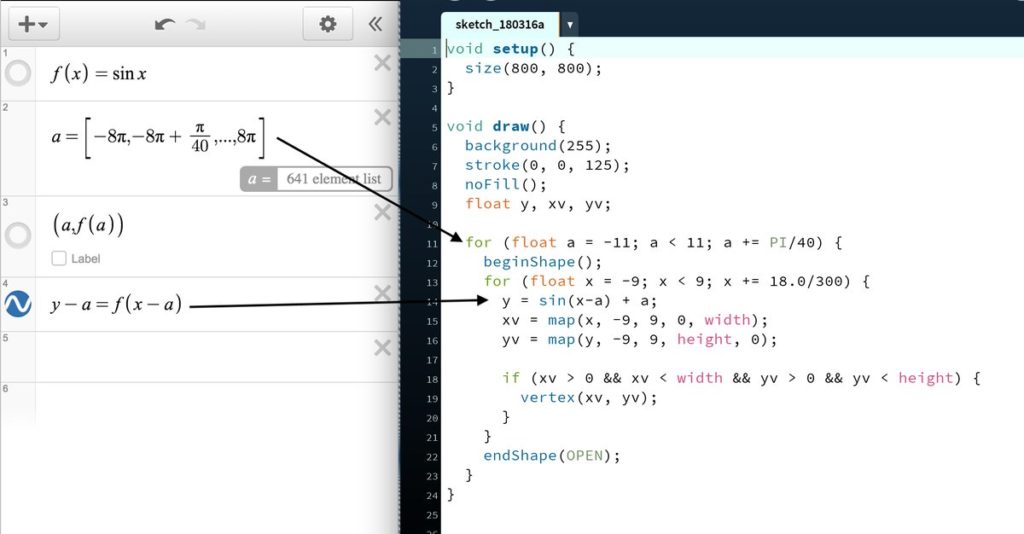

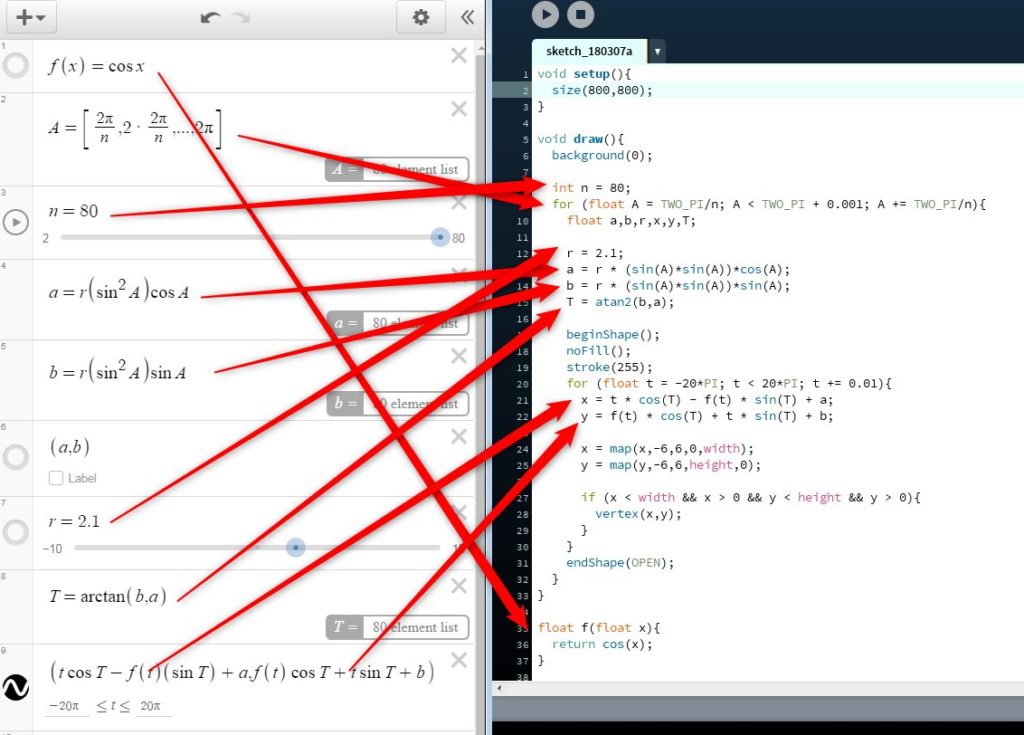

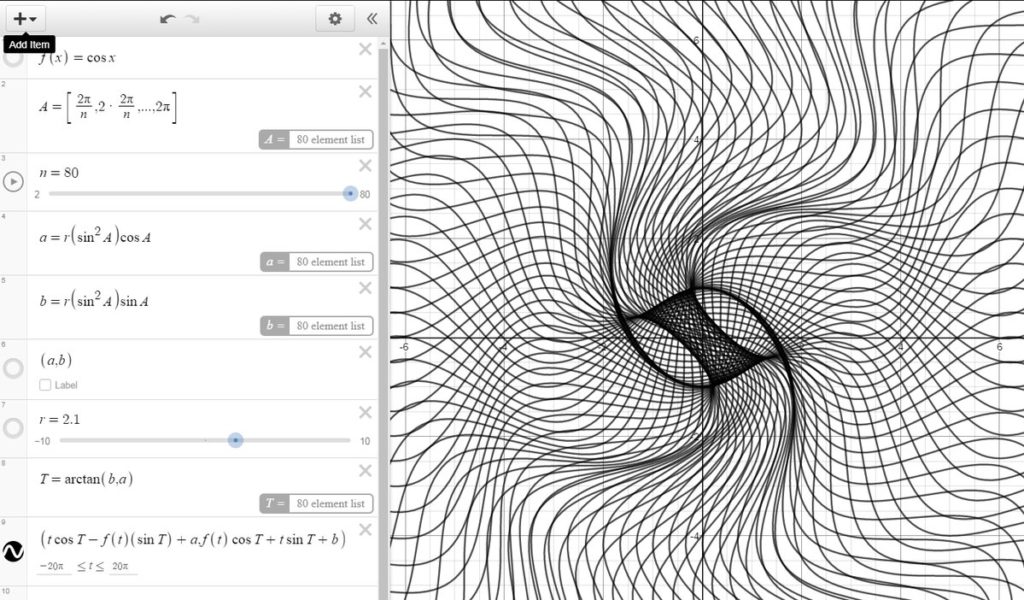

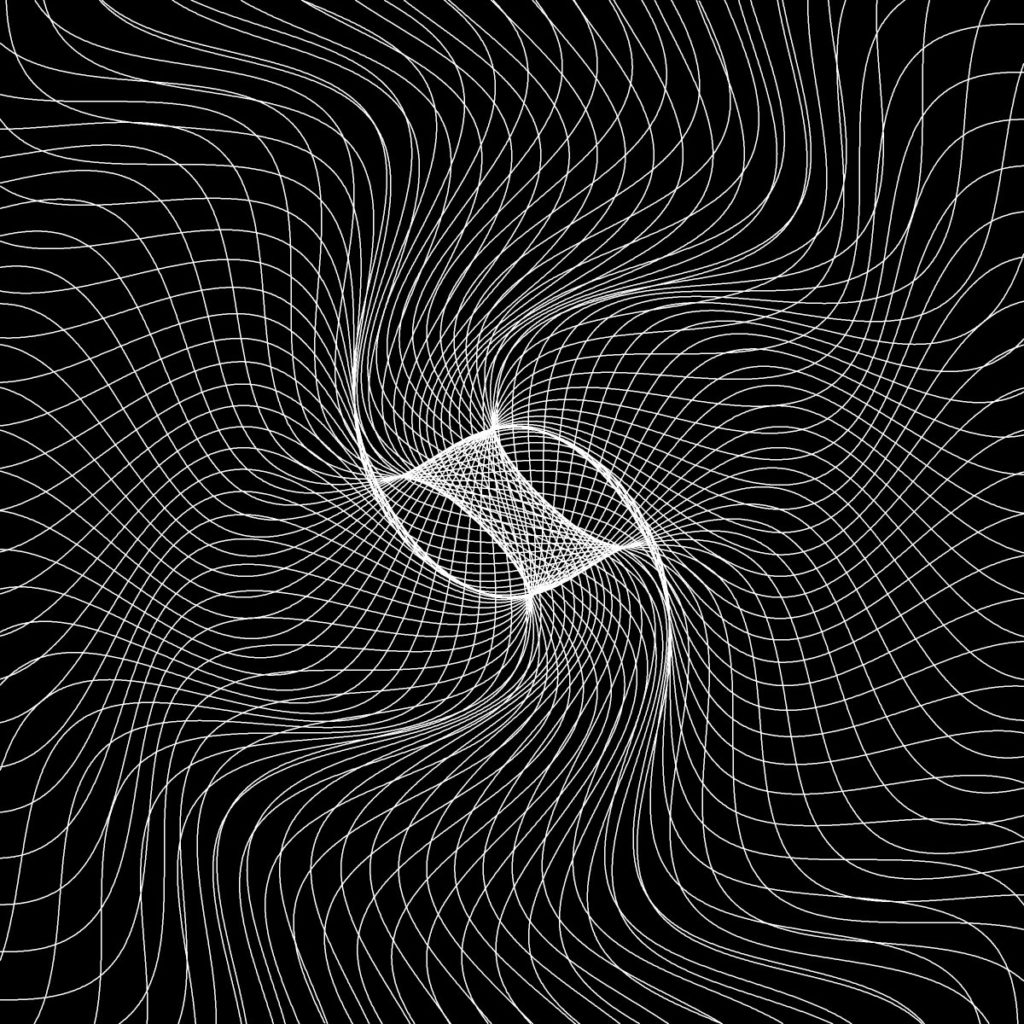

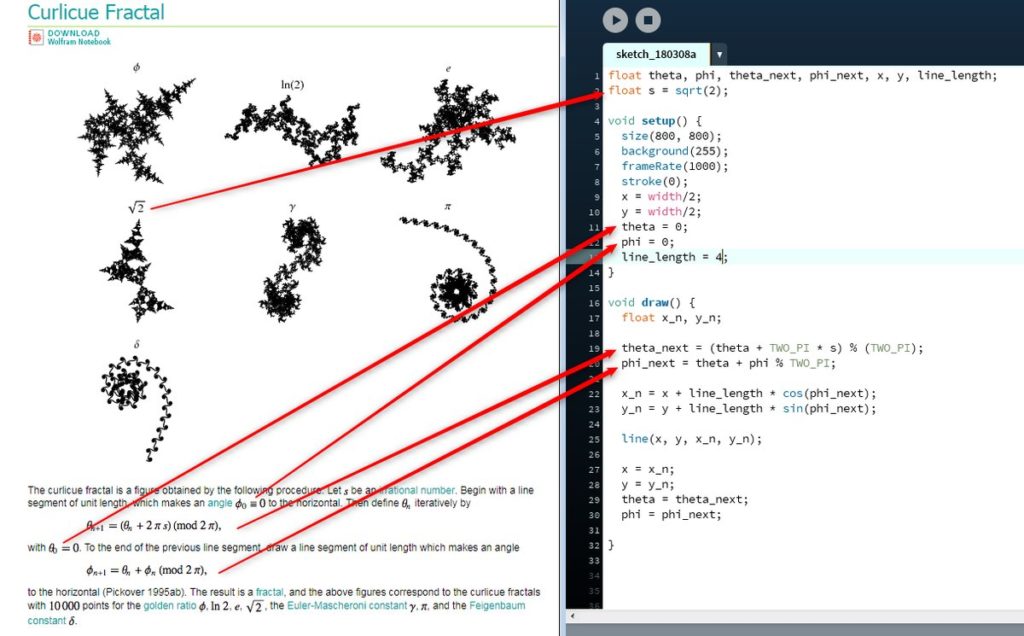

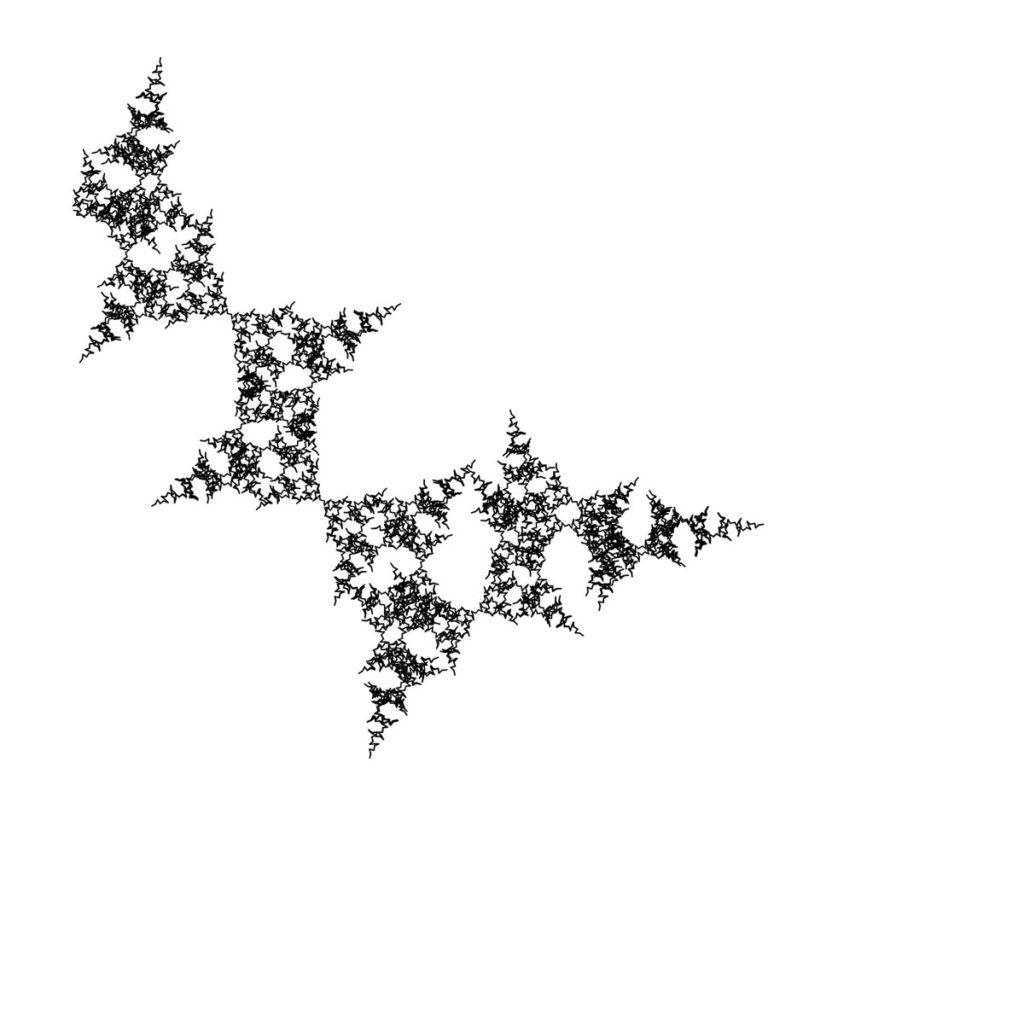

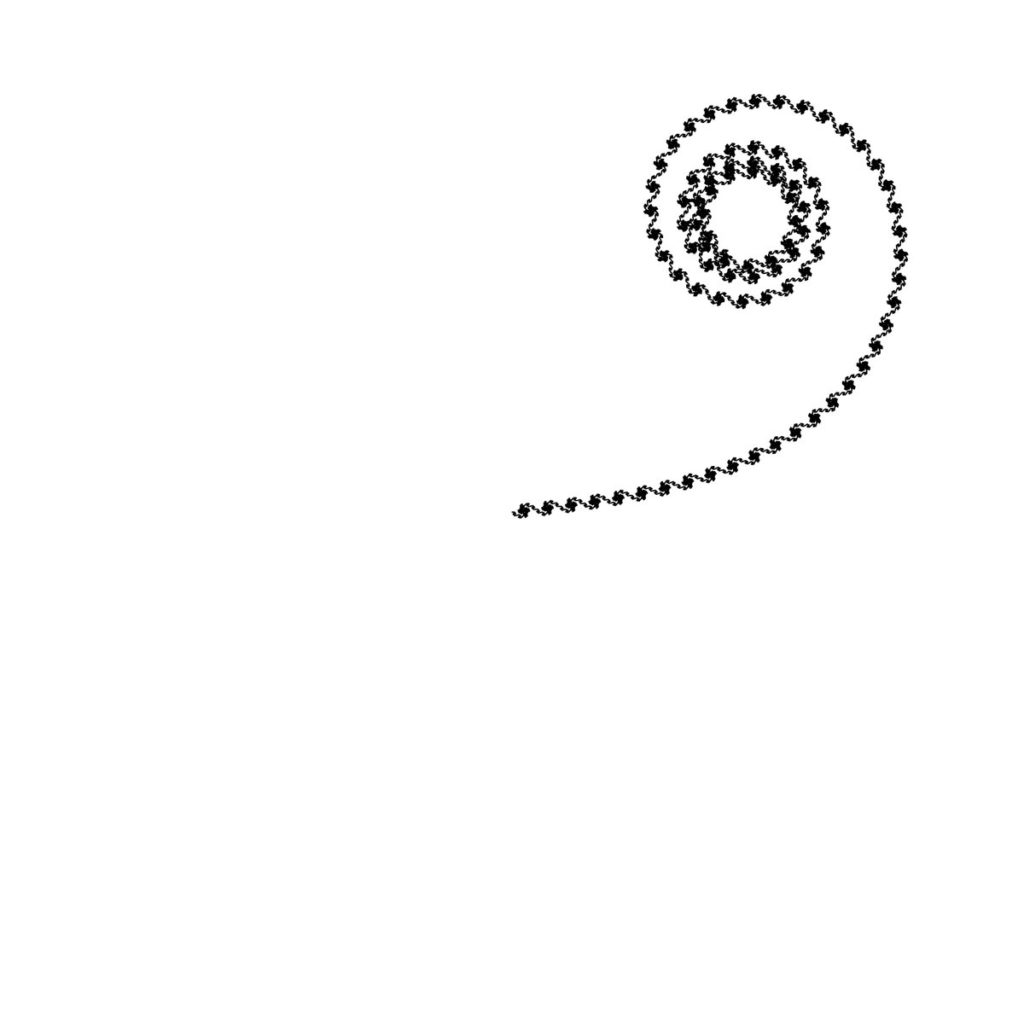

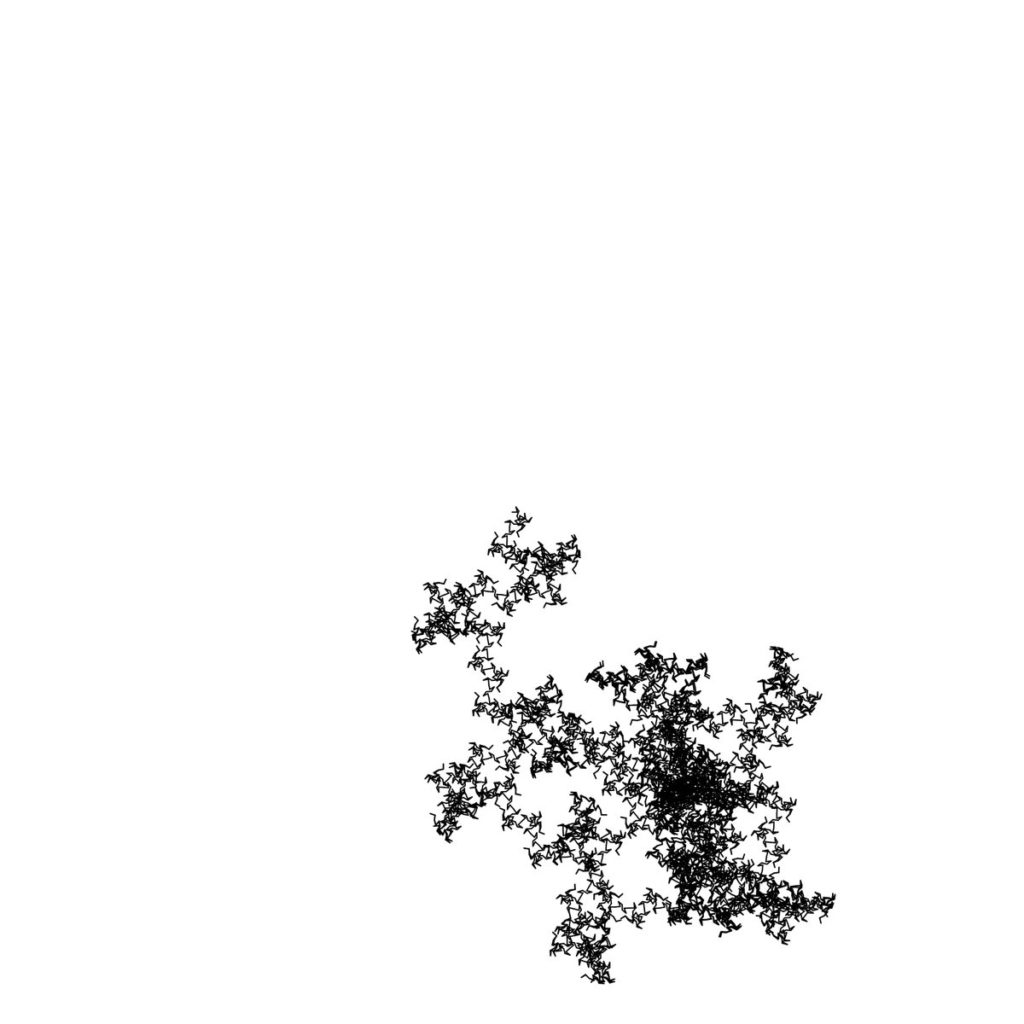

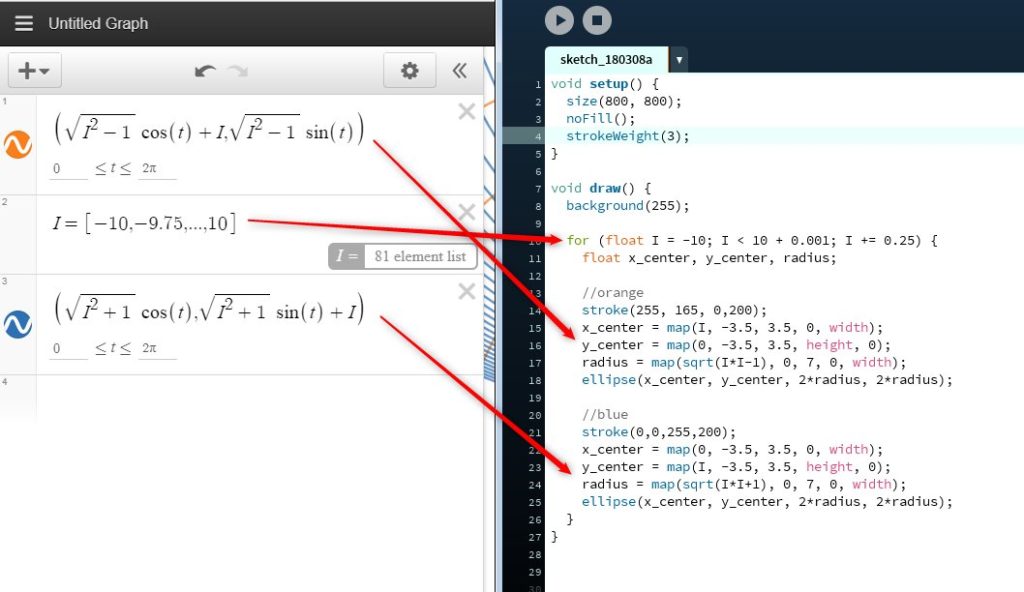

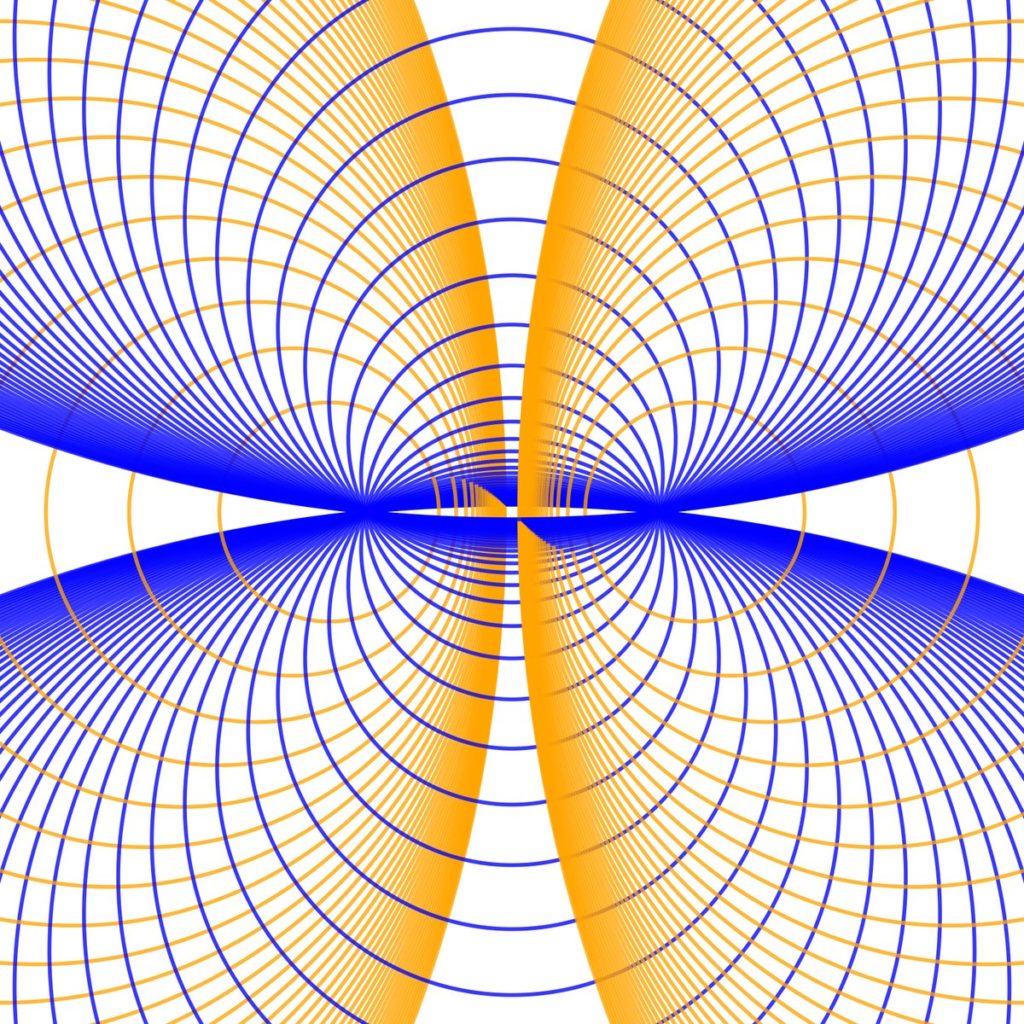

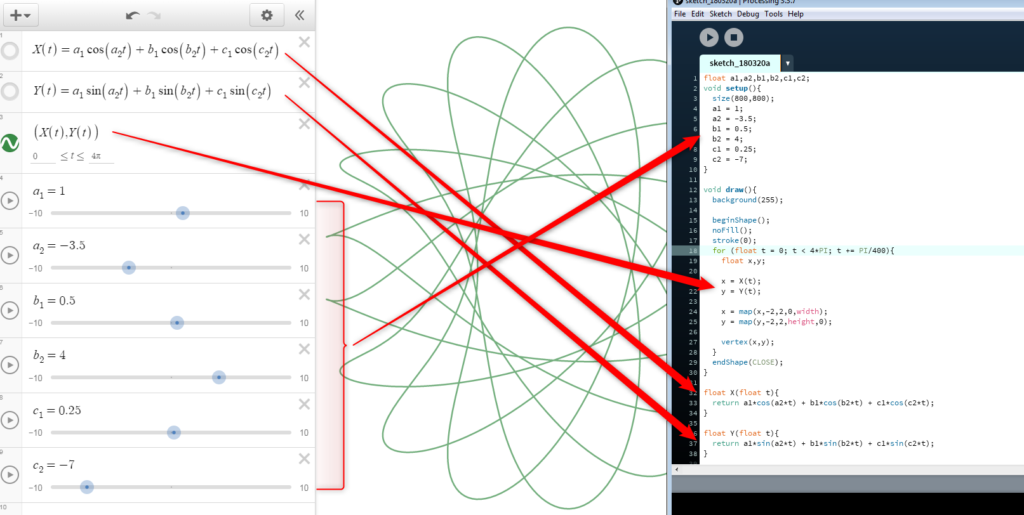

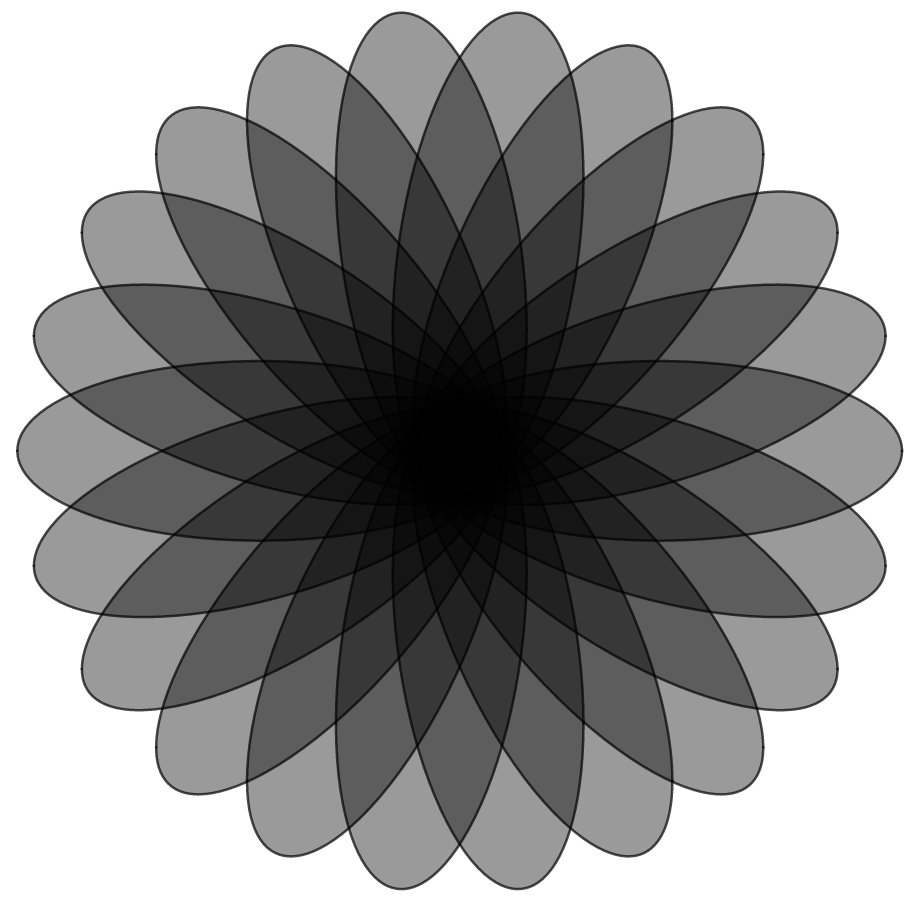

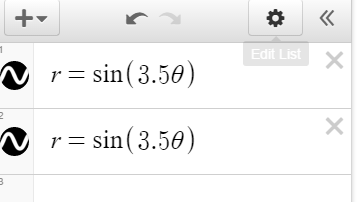

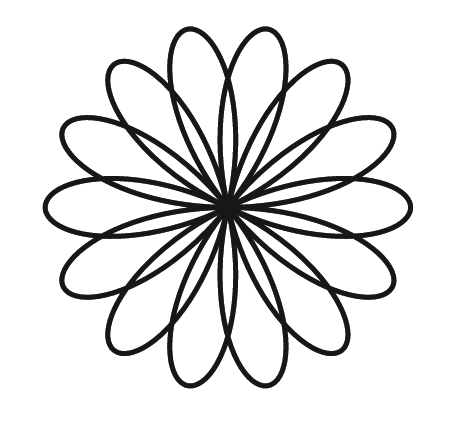

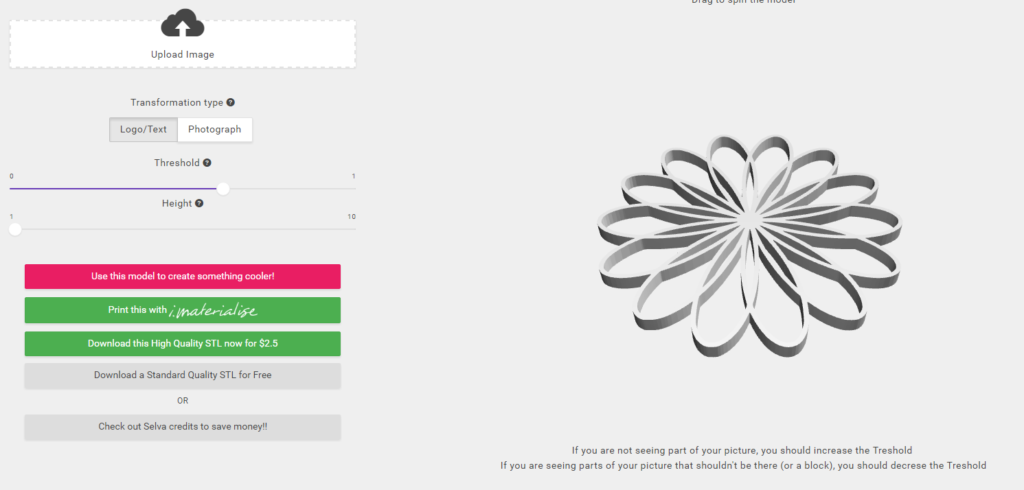

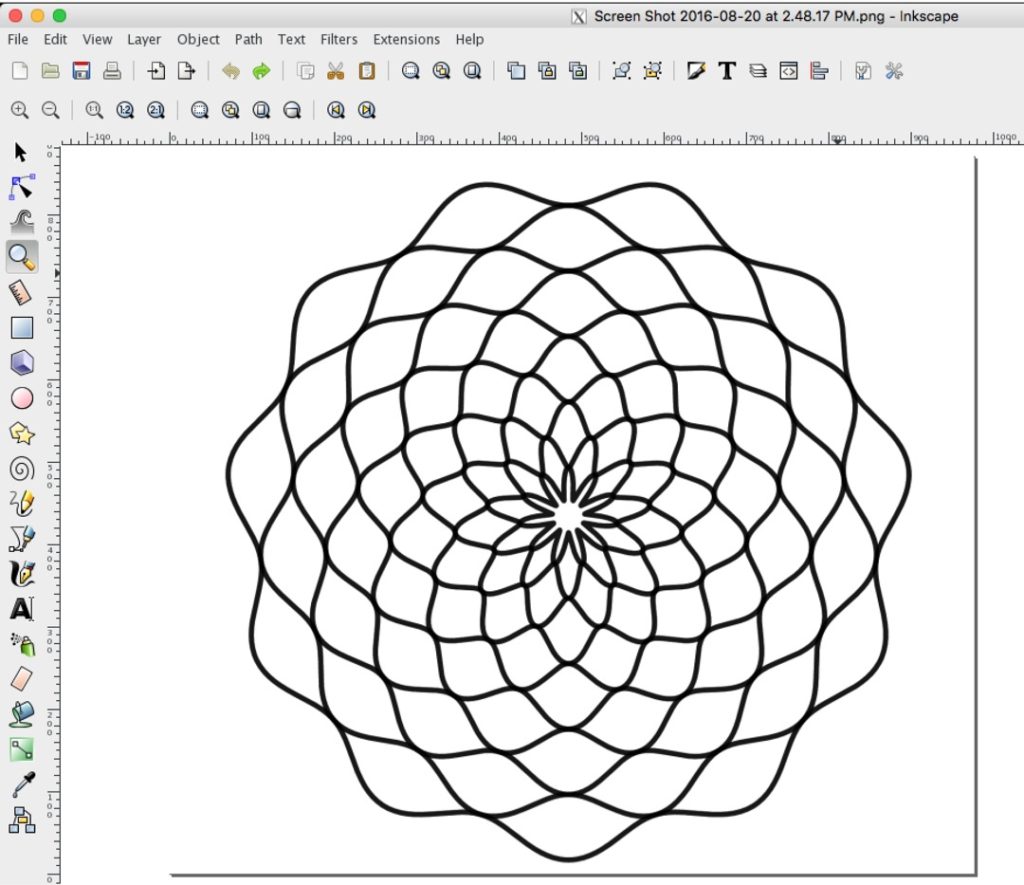

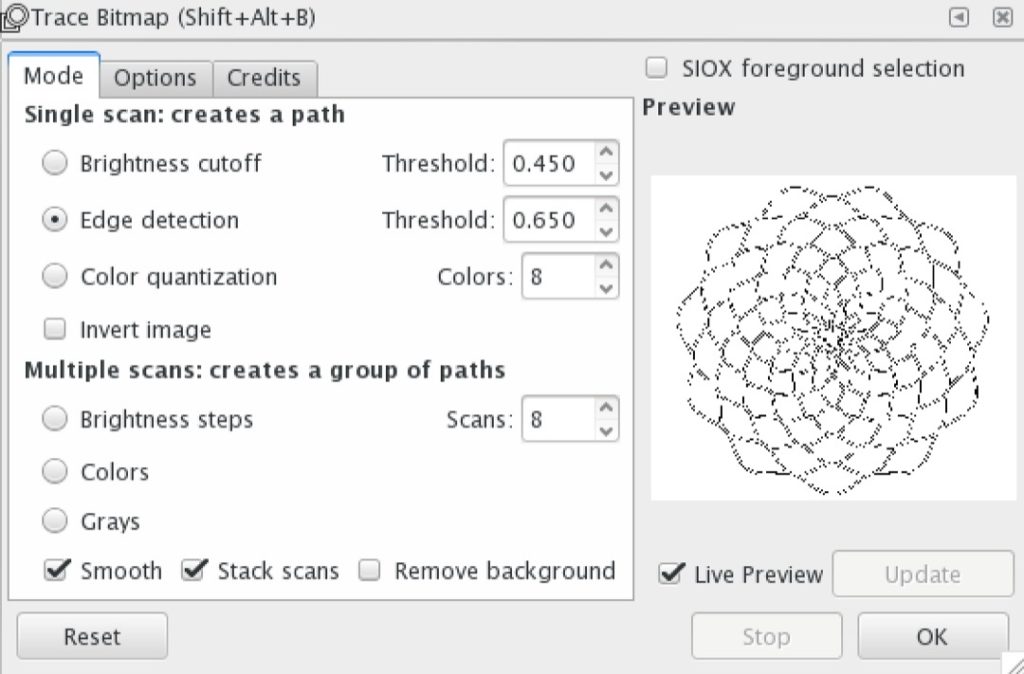

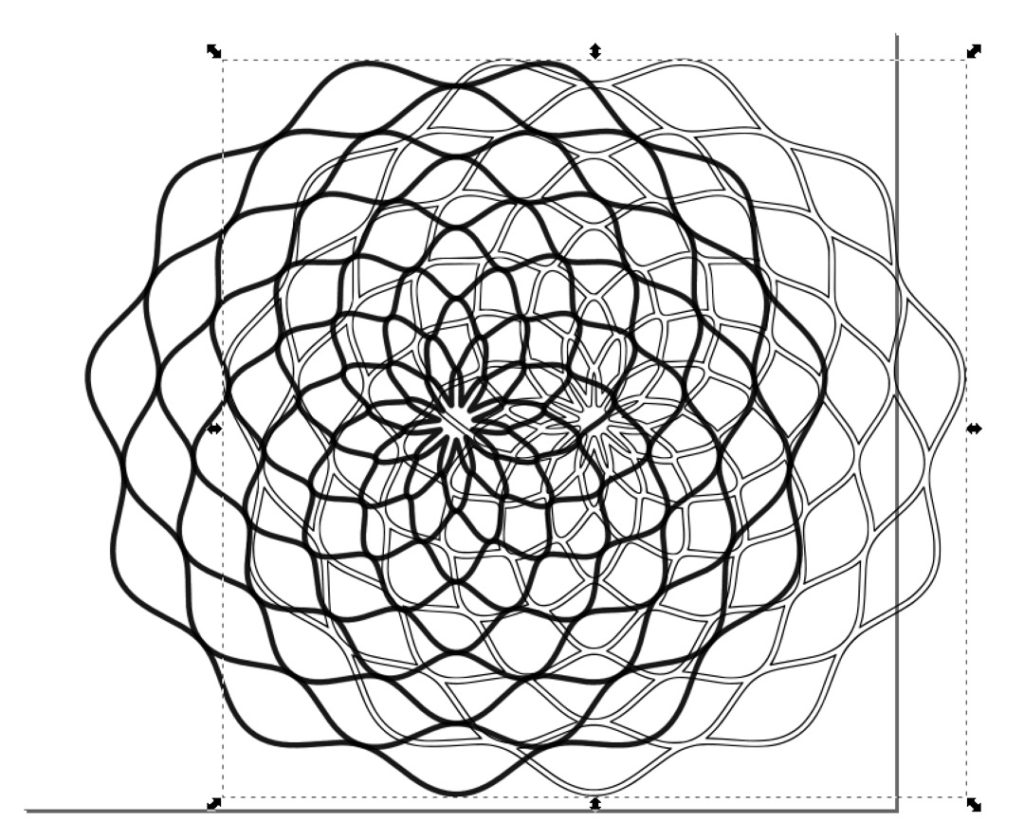

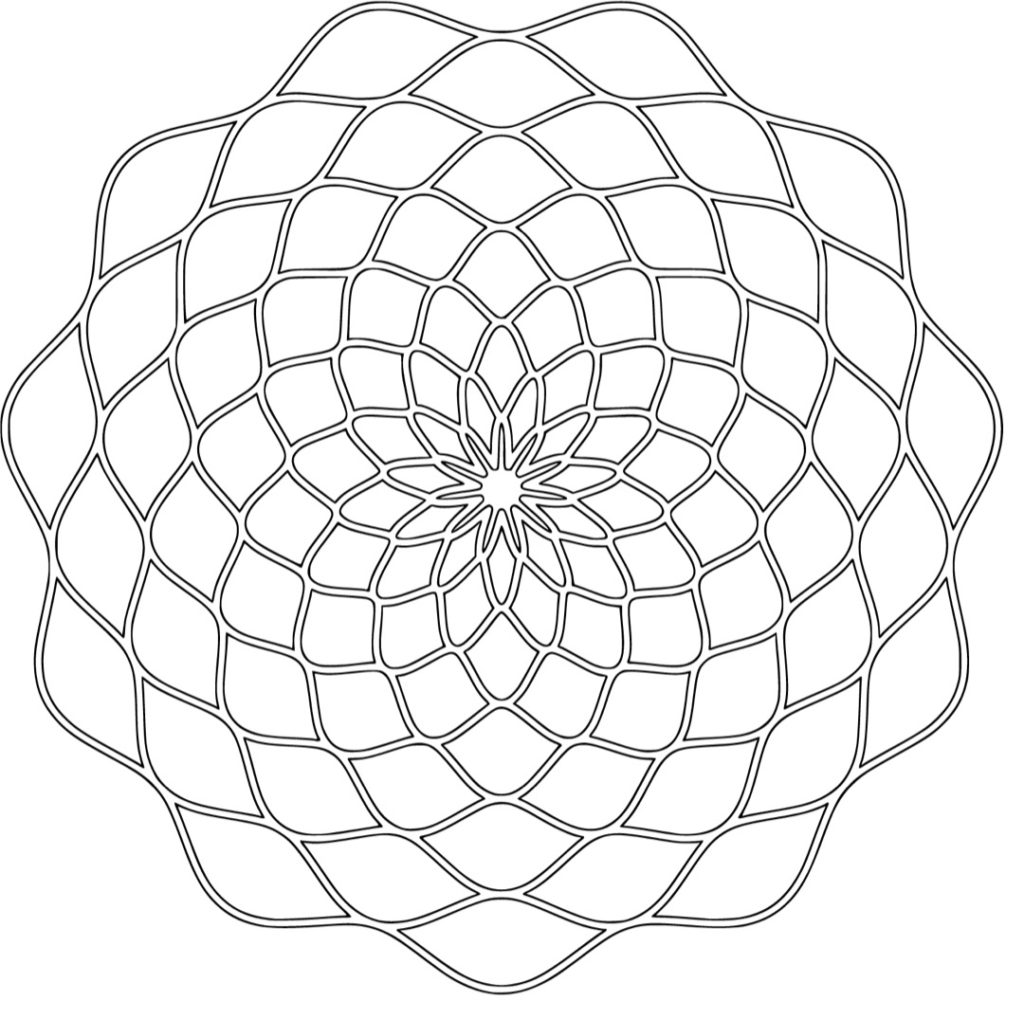

I also find a lot of value in solving a problem that someone else has already solved, because then I can compare approaches to the solution. When I see a really cool math idea scroll across Twitter, I often see if I can replicate that math with some sort of computer program. I’m not doing anything new to humanity, but it’s new to me, and that’s what’s important! Similarly, when we as math teachers can get kids genuinely interested in some variety of math (even if it’s not in the curriculum), we are making a difference. If it’s new to those kids, then that’s all that matters.